"PC is better!" and other system superiority arguments

This is the argument I have seen the most, and it is simply false. No modern system is inherently better than another. As the engine lag section shows, latency differences usually come from the implementation, not from the underlying system.

PC input lag is a nightmare!

We have obtained completely different and unstable results, because input lag on PC depends on:

- The actual hardware features (speed, vsync capabilities, internal architecture, etc.)

- Driver versions

- Driver settings

- A lot of unknown variables.

In fact, the lag of some games changes over time, because newer hardware has different capabilities in terms of GPU synchronization. Some of the games we tested also showed unstable lag because a context switch, such as an alt-tab, introduced an additional frame into the pipeline.

We also found that the reverse is problematic: on old hardware, newer games may not support the newer latency settings, which makes it impossible to tune them for low latency.

Consoles have no such issues.

Console input lag can be better than PC

Some games are designed for consoles first and then ported to PC. In these cases, the knowledge required to make a decent PC port is lacking, resulting in worse input lag on PC. For example, the BlazBlue series has always had a very stable 2.5f of lag on PS4. Once ported to PC, with the default driver settings we get 5.5f of lag. With some tuning we get down to 3.5f. Getting back to the original lag requires some hacking (e.g. tuning the DirectX context).
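To put these frame counts in perspective, here is a small Python sketch converting them to milliseconds. It assumes a 60 Hz refresh rate (1f ≈ 16.7 ms); `frames_to_ms` is a hypothetical helper, not part of the measurement tooling.

```python
# Assumption: a 60 Hz display, so one frame (1f) lasts 1000/60 ≈ 16.67 ms.
REFRESH_HZ = 60


def frames_to_ms(frames: float, refresh_hz: int = REFRESH_HZ) -> float:
    """Convert a lag figure expressed in frames into milliseconds."""
    return frames * 1000 / refresh_hz


# BlazBlue figures quoted above: PS4, PC with default drivers, PC after tuning.
for label, lag_f in [("PS4", 2.5), ("PC default", 5.5), ("PC tuned", 3.5)]:
    print(f"{label:>10}: {lag_f}f = {frames_to_ms(lag_f):.1f} ms")
```

Under this assumption, the default PC port is about 50 ms (3f) behind the PS4 version, and the tuned one still trails by about 16.7 ms (1f).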

Beware of the controllers

The PS3 was notorious for being a full frame slower. Nowadays, we know that the controllers available for the PS3 at the time had a 10ms polling interval and a terrible internal sampling rate, which may have contributed a lot to this.
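How much a 10ms polling interval can cost is easy to estimate: a button press lands at a random moment between two polls, so it waits half an interval on average and a full interval at worst. A minimal Python sketch, assuming a 60 Hz game and ignoring the controller's internal sampling (`polling_delay` is a hypothetical name):

```python
REFRESH_HZ = 60  # assumption: 60 Hz game, 1 frame ≈ 16.67 ms


def polling_delay(poll_interval_ms: float, refresh_hz: int = REFRESH_HZ) -> dict:
    """Extra delay caused by reading the controller at a fixed polling interval.

    A press arrives uniformly at random between two polls, so it waits on
    average half an interval and at worst a full interval before being read.
    The controller's internal sampling delay is NOT modelled here.
    """
    frame_ms = 1000 / refresh_hz
    average_ms = poll_interval_ms / 2
    worst_ms = poll_interval_ms
    return {
        "average_ms": average_ms,
        "worst_ms": worst_ms,
        "average_frames": average_ms / frame_ms,
        "worst_frames": worst_ms / frame_ms,
    }


d = polling_delay(10)  # PS3-era controller polled every 10 ms
print(f"average {d['average_ms']} ms ({d['average_frames']:.2f}f), "
      f"worst {d['worst_ms']} ms ({d['worst_frames']:.2f}f)")
```

In this model, polling alone costs at most about 0.6f at 60 Hz; the poor internal sampling rate, which the sketch ignores, adds on top of that.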

Why don't you display PC results?

For the reasons stated above. I have two PCs and I get different results on each (sometimes +2 frames!), even with the right configuration. Publishing results the same way I do for other systems would be completely misleading.

I heard that the Xbox One S and X have better input lag. Is it true?

It is technically true. But the only features they support, unlike Sony's PlayStation, are VRR (see the dedicated part in the display section) and ALLM. The latter instructs the display to switch to game mode automatically (if your TV supports it).

If you have already switched your display to game mode yourself, you will not gain any advantage.

Can an LCD screen be as fast as a CRT?

Yes and no. Nowadays, you can find LCD screens with a latency of around +2ms (~10ms in the middle of the screen). This is very close to a CRT.
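The link between the +2ms figure and the ~10ms middle-of-screen figure can be sketched in Python. This assumes (our interpretation) that +2ms is what the panel adds on top of a CRT-like top-to-bottom scanout at 60 Hz, with the blanking interval ignored; `latency_at_position` is a hypothetical helper.

```python
REFRESH_HZ = 60  # assumption: 60 Hz signal


def latency_at_position(position: float, added_ms: float = 2.0,
                        refresh_hz: int = REFRESH_HZ) -> float:
    """Latency seen at a vertical screen position (0.0 = top line, 1.0 = bottom line).

    Assumes the picture is scanned out top to bottom over one refresh period,
    like a CRT, ignoring the blanking interval, and that the panel adds a
    fixed `added_ms` on top of that scanout.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    scanout_ms = 1000 / refresh_hz
    return added_ms + position * scanout_ms


for pos in (0.0, 0.5, 1.0):
    print(f"{pos:.1f}: {latency_at_position(pos):.1f} ms")
```

With `added_ms=0`, the same function gives the theoretical CRT figure in this model, about 8.3 ms at mid-screen.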

However, LCDs perform poorly when it comes to sharpness at high speed. If you film one with a high-speed camera, the image will contain ghosting artifacts that CRTs do not have. Please consult this article if you need more information on that.

What are the right settings on PC?

On PC, you have access to your GPU's driver settings. The impact of each setting varies: if a game is already optimized for latency, they will have basically no effect.

Standard low latency settings

First of all, you need to run in exclusive full screen; otherwise, most of these settings will be ignored and you will add one frame of latency.

Secondly, this assumes that your system is fast enough to render frames in time; otherwise, you will have frame drops.

Finally, if you don't mind tearing, switch off Vsync. End of story.

Now, assuming that you want Vsync, the easy "fix" is to:

- Find the Max Pre-rendered Frames, Flip Queue or Low Latency Mode setting (the name depends on your driver) and set it to 1 (or On).

- Find the Triple Buffering setting and disable it.

This applies to all DirectX 10+ and OpenGL games. At this point, you are basically in console lag territory.

Warning: this may NOT fix anything in games developed with DirectX 9 (at the time of writing; drivers may be updated to handle it at some point).

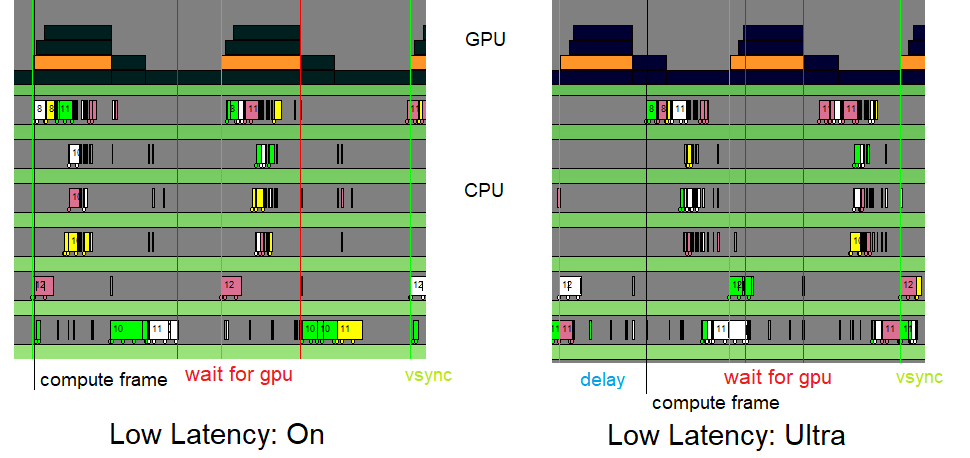
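Why limit the queue? A toy Python model shows the mechanism: when the CPU runs ahead of the display with Vsync on, the queue fills up, and every frame it is allowed to hold adds about one refresh period of delay. The assumptions are listed in the comments and are simplifications, not how any specific driver works; `queued_frame_latency` is a hypothetical name.

```python
from collections import deque


def queued_frame_latency(max_queued: int, refresh_hz: int = 60, vsyncs: int = 20) -> float:
    """Toy model of a Vsync flip queue when the CPU is much faster than the display.

    Assumptions (simplifications, not actual driver behaviour):
    - the CPU fills every free queue slot instantly, reading input at that moment;
    - the display takes the oldest queued frame at each Vsync;
    - render time and scanout are ignored.
    Returns the steady-state delay between input read and display, in ms.
    """
    if max_queued < 1:
        raise ValueError("the queue must hold at least one frame")
    period_ms = 1000 / refresh_hz
    queue = deque()
    delay_ms = 0.0
    for n in range(vsyncs):
        now = n * period_ms
        if queue:
            # Vsync: display the oldest queued frame and measure its age
            delay_ms = now - queue.popleft()
        # The CPU refills the queue, sampling input "now" for each new frame
        while len(queue) < max_queued:
            queue.append(now)
    return delay_ms


for depth in (1, 2, 3):
    print(f"queue of {depth}: {queued_frame_latency(depth):.1f} ms")
```

In this model, going from a queue of 3 frames to 1 saves two refresh periods (about 33 ms at 60 Hz).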

Ultra Low Latency Mode

A new feature appeared in mid-2019 in the Low Latency section of AMD and Nvidia drivers. This feature tricks the game by delaying the CPU (and therefore the input reading), which is compensated by a shorter GPU waiting time, as demonstrated in the following debug trace:

The added delay is half a frame. Therefore:

- It can reduce input lag by up to 0.5f.

- Your game MUST be able to render a full frame in less than half a refresh period (about 8.3 ms at 60 Hz). Otherwise, you will have frame drops!
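Both consequences come down to the same number: half a refresh period. A small Python sketch, assuming the half-frame delay stated above and a fixed render time per frame (both helper names are hypothetical):

```python
def half_frame_ms(refresh_hz: int = 60) -> float:
    """Half a refresh period: both the best-case lag gain and the render budget."""
    return 1000 / refresh_hz / 2


def renders_in_time(render_ms: float, refresh_hz: int = 60) -> bool:
    """True if a frame that takes `render_ms` to render should avoid drops in this mode."""
    return render_ms < half_frame_ms(refresh_hz)


for hz in (60, 120):
    print(f"{hz} Hz: budget {half_frame_ms(hz):.2f} ms, "
          f"6 ms render fits: {renders_in_time(6.0, hz)}")
```

In this model, the budget shrinks to about 4.2 ms at 120 Hz, so a game that comfortably fits at 60 Hz may drop frames with this mode at higher refresh rates.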

Fast Sync

In the vertical sync option, if your hardware supports it, you will find a mode called Fast (Nvidia) or Enhanced (AMD).

This feature basically behaves like Vsync Off, then manages the flip queue so that only the latest completed frame is displayed at each Vsync.

- It avoids any clogging of the GPU pipeline and gives lower input lag than standard Vsync, even on older DX9 games.

- Your CPU will consume more resources, because it may prepare several frames per refresh.

- You will have unstable lag, because the CPU and GPU work completely de-synced from the display refresh.
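Why the lag becomes unstable can be illustrated with a small Python simulation. It assumes the game renders at a fixed rate faster than the display and that, at each Vsync, only the newest completed frame is shown; this models the behavior described above, not any vendor's implementation. `displayed_frame_ages` is a hypothetical name.

```python
import math
from fractions import Fraction


def displayed_frame_ages(render_fps: int, refresh_hz: int = 60, vsyncs: int = 6) -> list[float]:
    """Age (ms) of the input shown at each Vsync when only the latest frame is displayed.

    Assumptions: frame i reads input at i * render_ms and completes at
    (i + 1) * render_ms; at each Vsync the newest completed frame is shown
    and older ones are discarded. Fractions keep the timing exact.
    """
    if render_fps <= refresh_hz:
        raise ValueError("this sketch assumes rendering faster than the refresh rate")
    render_ms = Fraction(1000, render_fps)
    period_ms = Fraction(1000, refresh_hz)
    ages = []
    for n in range(1, vsyncs + 1):
        vsync = n * period_ms
        # Newest frame i such that (i + 1) * render_ms <= vsync
        newest = math.floor(vsync / render_ms) - 1
        ages.append(round(float(vsync - newest * render_ms), 2))
    return ages


print(displayed_frame_ages(150))  # [10.0, 6.67, 10.0, 6.67, 10.0, 6.67]
```

At 150 fps on a 60 Hz display, the age of the displayed input alternates between 10 ms and about 6.7 ms in this model: low, but not constant.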

Beyond...

If you wish to go beyond that: good luck, but it is possible with a program like RTSS.

It lets the player shift the Vsync tearing point up and down the screen, at the risk of a dropped frame here and there.

Basically, the idea at this point is that you tune the GPU synchronization yourself.

There are many tutorials out there on how to tune this software for the best result, with varying success.

Can I overclock my USB polling to 1ms?

It is possible on PC, but it requires some expertise with tools like these. Use them at your own risk.

How can I get the fastest screen latency?

There are a few settings that you must tweak. Firstly, if you have VRR and your system handles it, activate it.

Secondly, enable the "game mode" of your monitor or TV. It will disable post-processing.

Finally, if your experience is still poor, consult DisplayLag, which has measured a LOT of displays for you.

On modern consoles: does the 720p/1080p setting change the lag?

Nope. We tested this thoroughly and nothing changes. Moreover, the game is rendered at a fixed 1080p and simply downscaled to fit your screen if necessary.

What about 4K and pro consoles?

On some consoles, games that support this feature can be rendered at 4K. Similarly, the PS4 Pro has a Supersampling setting: it forces the internal resolution to 4K and downscales afterwards, for better quality on 2K displays for instance.

This generally has no impact on input lag. However, in rare cases where a game has bad GPU synchronization, the longer 4K render time may change the synchronization (for better or worse).

Is the stability metric actually important?

Yes and no.

- Yes: because it shows how the latency is felt by the user, and it can reveal bad GPU synchronization problems (like vanilla SFV).

- No: because if the game engine runs internally at 100fps while rendering at 60fps, you will see low stability, and that is expected. This also happens in old games like Street Fighter II Turbo, which runs at 90fps internally.
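The effect of an engine rate that differs from the display rate can be sketched in Python by counting engine ticks per displayed frame. This is a simplified model with the assumptions listed in the docstring; an uneven count means the delay between the tick that reads an input and the frame that shows it varies from frame to frame, which lowers the stability figure. `ticks_per_frame` is a hypothetical name.

```python
def ticks_per_frame(engine_hz: int, display_hz: int, frames: int) -> list[int]:
    """Number of engine ticks completed during each displayed frame.

    Assumptions: engine ticks and display refreshes are evenly spaced and start
    in phase at t = 0; each displayed frame shows the state of the last
    completed tick. Integer arithmetic keeps the counts exact.
    """
    completed = [k * engine_hz // display_hz for k in range(frames + 1)]
    return [after - before for before, after in zip(completed, completed[1:])]


print(ticks_per_frame(100, 60, 6))  # [1, 2, 2, 1, 2, 2]
print(ticks_per_frame(90, 60, 6))   # [1, 2, 1, 2, 1, 2]
print(ticks_per_frame(60, 60, 6))   # [1, 1, 1, 1, 1, 1]
```

When the engine is locked to the display, every frame gets exactly one tick and this source of variation disappears.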

Does [insert device here] add lag?

Most of the time, such a device cannot introduce lag, because introducing lag requires buffering the signal, which is a complex mechanism. And if you are buying something cheap that handles 4K resolutions, it surely does not have the horsepower to retain that amount of data.

So, we have the following results:

- USB / HDMI repeater: no lag.

- USB hub: unless it is a very old one, it will support USB 2.0 at full speed.

- HDMI splitter, switch or audio extractor: unless it is something very expensive with overlays and such, no lag.

- Video capture passthrough: no lag.

- Video converter: tricky. Cheap converters like HDMI to Component are usually lag-free. But cheap upscalers like SCART to HDMI will lag at least one frame behind, because converting legacy video to HDMI actually requires a lot of processing. If the converter is purely analog, there won't be any lag.