Game Development Primer

To show how a game introduces lag, we first have to explain, in a nutshell, how a game is built and how the hardware behaves.

The main loop

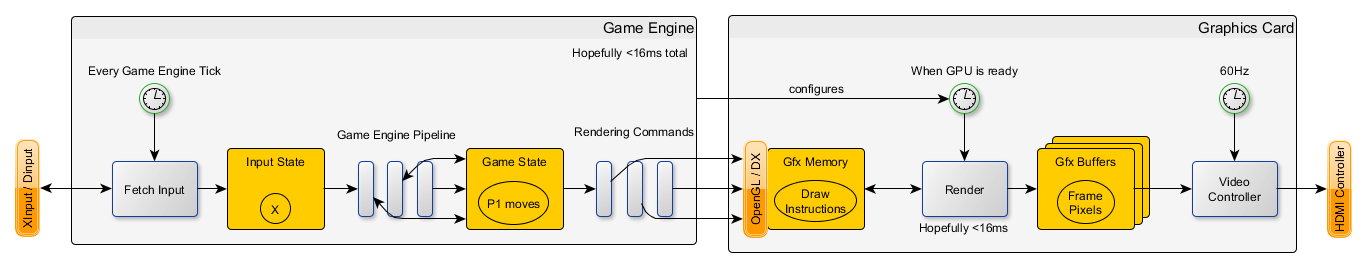

Every game has a loop somewhere at its core. On every game tick (a fixed or flexible clock), this loop reads events from the player, processes them, and then renders the result on the screen.

These steps are definitely not atomic: each one is a long succession of actions. For reference, here is the main execution sequence from Unity.

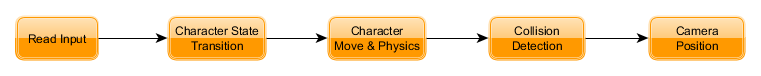

If we look at only what is interesting for us here, we can simplify it in simple blocks:

- Read an input. This will be performed using one of the operating system interfaces like `DirectInput`, `XInput` or similar.

- Execute a set of tasks to update the game state (gameplay engine)

- Send a set of rendering commands (rendering engine). These will be issued in a graphics API like `OpenGL`, `DirectX` or `Vulkan`.

- Wait for the next game tick

Modern game engines may involve parallelization, so the gameplay and the rendering may run on different clocks in separate contexts. The real picture can be more complex, but the gist of it is there.
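As a rough illustration, the four blocks above can be sketched as a single loop. This is a minimal sketch and not how any particular engine implements it: `run_main_loop` and its `read_input`, `update` and `render` callbacks are hypothetical names introduced here, and the fixed 60 Hz tick is an assumption.

```python
import time


def run_main_loop(read_input, update, render, ticks, tick_seconds=1 / 60):
    """Run a fixed number of game ticks: read input, update, render, wait."""
    state = None
    next_tick = time.perf_counter()
    for _ in range(ticks):
        events = read_input()           # 1. read the player input
        state = update(state, events)   # 2. gameplay engine updates the game state
        render(state)                   # 3. rendering engine issues its commands
        next_tick += tick_seconds       # 4. wait for the next game tick
        time.sleep(max(0.0, next_tick - time.perf_counter()))
    return state
```

Passing the three steps as callbacks keeps the ordering explicit, which matters later when we look at how a wrong ordering adds lag.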

The gameplay engine

The role of this engine is to execute all the main logic of the game. It is executed on the CPU, and it is composed of a set of subroutines which are intertwined. Here is a very simple example of a set of steps that the gameplay engine is composed of:

First the input is read; it may be put in a buffer to detect motions or be transmitted over the network. Then we can take some actions, like changing the main character from a resting pose to a walking state. We can continue with as many steps as we need.

The rendering

This engine behaves in a completely different way. Because all the rendering is usually done on the graphics processor, this engine issues a set of commands that ultimately result in a 3D scene computation and, most importantly, a frame to display.

Contrary to the gameplay engine, the rendering engine only prepares a set of commands. Those commands are not sent to the GPU synchronously: they are executed when the GPU decides to do so.

The frame buffers and a video transmission primer

Once the rendering is done, a ready-to-display frame is available. In the graphics card, there is a set of frame buffers that can be piled up. The role of the graphics controller is to take them as a queue and send the frames one at a time, one pixel at a time, from top-left to bottom-right, through a cable like HDMI. When the controller has finished sending all the pixels, it goes through a phase called the vertical sync, and then it continues with the next frame. There are two major modes to select the frame buffer that is transmitted:

- `vsync on`: wait for `vsync` to select the next frame buffer available in the queue

- `vsync off`: select the next frame buffer as soon as possible, at the cost of having tearing.

If no frame buffer is available, the previous frame is used again. That is what a "frame drop" is, in more detail.
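To make the frame drop concrete, here is a small simulation of the `vsync on` mode. It is a sketch under assumptions: `scan_out` is a hypothetical helper, and frames are represented by simple labels rather than real buffers.

```python
from collections import deque


def scan_out(ready_at_vsync, vsyncs):
    """Return the frame shown after each vsync with vsync on.

    `ready_at_vsync[i]` lists the frames that finished rendering before
    vsync number i. When the queue is empty, the previous frame is repeated.
    """
    queue = deque()
    shown = None
    displayed = []
    for i in range(vsyncs):
        queue.extend(ready_at_vsync.get(i, []))
        if queue:
            shown = queue.popleft()  # flip to the next queued frame buffer
        # otherwise nothing is ready: the previous frame stays on screen
        displayed.append(shown)
    return displayed
```

For example, `scan_out({0: ["A"], 1: ["B"], 3: ["C"]}, 4)` shows frame `B` twice, because nothing was ready for the third vsync.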

In conclusion, we can see the whole engine chain in the following drawing.

How is lag introduced in the gameplay engine?

We have properly described how a game engine is structured in a general sense. Now, we can dig into the causes for lag.

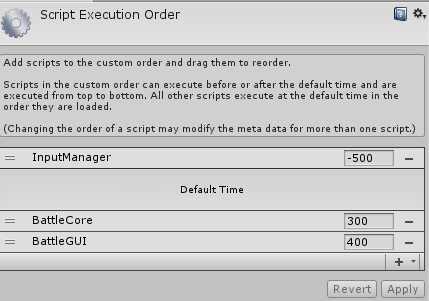

Pipeline ordering faults

The first issue, and the simplest one to fix, is the ordering. As we have seen previously, the gameplay engine is composed of a series of subroutines. Unfortunately, those steps have inter-dependencies, and the order of execution will definitely impact the result.

This can have a major impact without being visible. Let's say, for the sake of the example, that we put the input read step after the character reaction in our previous example. The game will continue to work without flaws, BUT it will add one game tick of lag, because the input will not be taken into account until the next game tick.
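The extra tick can be reproduced with a toy simulation. This is only a sketch: the step names `"read"` and `"react"` and the `simulate` helper are hypothetical, standing in for real gameplay subroutines.

```python
def simulate(order, press_tick, ticks=5):
    """Return the tick on which the character first reacts to a button press.

    `order` lists the subroutines run on every tick. "read" latches the
    input once the button is down, "react" moves the character if an input
    has been latched.
    """
    latched = False
    for tick in range(ticks):
        for step in order:
            if step == "read" and tick >= press_tick:
                latched = True
            elif step == "react" and latched:
                return tick
    return None


good = simulate(["read", "react"], press_tick=2)  # reacts on the press tick
bad = simulate(["react", "read"], press_tick=2)   # reacts one tick later
```

Both orderings produce a game that works, which is exactly why this kind of fault goes unnoticed.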

In Unity, for instance, this is decided by the Script Execution Order panel. If you don't assign priorities in this panel, the scripts will be executed in the order in which they are loaded, which may be dangerous!

Clock management and stability

Similarly, when the engines are not well understood, it is easy to confuse the game tick clock with other clocks, like the renderer clock or the physics engine clock. Often, pre-made engines come with two major clocks: the internal game clock and the rendering clock. Therefore, some routines will be fired once per frame while others will be executed zero or multiple times per frame, depending on the configuration.

If the game tick runs on a fixed-step clock whose step is longer than the frame time, then your game will lag more than necessary.

It is not uncommon to see settings with a fixed step of `20ms`, which makes the internal game run at 50fps. This MUST be fixed early in the development because it will have gameplay impact.
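The effect of a fixed step on the number of game ticks per frame can be checked with a classic accumulator. This is a minimal sketch, assuming a 60 Hz display (a frame time of about 16667 µs); `ticks_per_frame` is a hypothetical helper, and times are integers in microseconds to avoid rounding surprises.

```python
def ticks_per_frame(fixed_step_us, frame_us, frames):
    """Count how many fixed-step game ticks run during each rendered frame."""
    accumulator = 0
    counts = []
    for _ in range(frames):
        accumulator += frame_us           # one rendered frame has elapsed
        ticks = 0
        while accumulator >= fixed_step_us:
            accumulator -= fixed_step_us  # consume one game tick
            ticks += 1
        counts.append(ticks)
    return counts


slow_clock = ticks_per_frame(20_000, 16_667, 6)  # 20 ms fixed step
fast_clock = ticks_per_frame(10_000, 16_667, 3)  # 10 ms fixed step
```

With the 20 ms step, one frame out of six runs no game tick at all, so an input arriving then waits for a whole extra frame. With the 10 ms step, some frames run one tick and others run two.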

The second impact of such complete decoupling is instability in the input lag. Stability is the probability of getting the same lag with the same video timing. If the clocks are too decoupled, the lag will be unstable.

Input buffer strategies

It may be useful to allow your gameplay engine to lag one tick just to make an input buffer. For instance, if your gameplay has a feature where an action is triggered by pressing two buttons at the same time, you need to introduce some kind of input leniency; otherwise, pressing both buttons on exactly the same tick is hard to do. A naive implementation would delay the input for one frame to confirm the two-button press.

However, try to find more creative solutions to this issue, like allowing the previous action to be cancelled for one tick.
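One possible shape for the cancel approach is sketched below. Everything here is an assumption made for illustration: the buttons `"A"` and `"B"`, the `resolve_actions` helper and the action labels are hypothetical, and `presses` holds the buttons newly pressed on each tick.

```python
def resolve_actions(presses):
    """Map per-tick button presses to actions with one tick of leniency.

    A single press starts its action immediately. If the other button
    arrives on the very next tick, the started action is cancelled into
    the combined one, so no input is ever delayed.
    """
    actions = []
    pending = None  # single-button action started on the previous tick
    for pressed in presses:
        if pressed == {"A", "B"}:
            actions.append("combo")
            pending = None
        elif len(pressed) == 1:
            button = next(iter(pressed))
            if pending is not None and pending != button:
                actions.append("cancel " + pending + ", combo")
                pending = None
            else:
                actions.append("start " + button)
                pending = button
        else:
            actions.append("idle")
            pending = None  # the leniency window has expired
    return actions
```

The single-button action still starts with no added delay, and only the combined action pays the one tick of leniency.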

How is lag introduced during the rendering?

GPU sync will surely be the major issue, depending on your configuration. It may be the most complex to fix (hardcore GPU pipeline debugging) or the simplest (calling a function to change a setting).

GPU synchronization introduction

As we have seen, the GPU is by nature asynchronous, meaning it will execute its commands when it wants. This means that if the game and the GPU are way out of sync, then the lag can accumulate to a point of being unbearable.

The concept to grasp is that the GPU and the CPU are on separate timelines and can execute things in parallel. So, in the best case, the GPU will execute the rendering of one frame while the CPU is working on the next.

If this feels natural, it should not. In this configuration, there is a large pause (several milliseconds) between the time when the rendering commands are issued and the time when the actual frame is computed. Assuming that the render takes 16.6ms and that everything is properly synced, if the input appears between game update #0 and #1, the reaction frame will start its display during game update #3. Thus, this pipeline has an average lag of 2.5f (the additional 0.5f comes from the fact that the input can appear anywhere between #0 and #1).
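The 2.5f figure can be verified with a few lines of arithmetic. This sketch measures time in frames from the start of game update #0 and assumes that the reaction frame reaches the screen right as game update #3 begins; `latency_in_frames` is a hypothetical helper.

```python
from fractions import Fraction


def latency_in_frames(input_time):
    """Lag, in frames, for an input arriving `input_time` frames after update #0 starts."""
    display_start = 3  # assumption: the reaction frame is displayed as update #3 begins
    return display_start - input_time


# Inputs spread evenly between update #0 (t = 0) and update #1 (t = 1).
samples = [Fraction(i, 1000) + Fraction(1, 2000) for i in range(1000)]
average = sum(latency_in_frames(t) for t in samples) / len(samples)
```

An input landing just after update #0 waits almost 3 frames and one landing just before update #1 waits about 2 frames, hence the 2.5 frame average.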

In this configuration, there exists one trick to reduce latency a bit. If we wait a bit before the game update, we can shift the top lane to the right, and therefore reduce the time between the input (beginning of the game update) and the end of the frame render. The risk of doing this is to make the GPU idle due to the CPU waiting too much.

Conversely, if we have a slow game update, the pace is dictated by the CPU update speed: the GPU will sit idle, and the timings of the frame buffer production are different. In the best-case scenario you will get this rendering.

In this configuration, the aforementioned trick will not help you. It will just wait more and therefore delay the rendering commands, thus delaying your frame production. The delay between the beginning of the game update and the end of the render will always be the same.

Queue Management

You may notice that the video controller is absent from the previous drawings. That is because it is yet another separate component of the video production, with its own timeline.

Assuming that the CPU is able to churn out two full frame render commands during one displayed frame, the real question becomes: does the queue continue to grow? Obviously not. The driver puts a bound on the number of elements in the queue. If the bound is met, it tells the CPU to wait before accepting new commands.
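The back-pressure described here can be sketched with a bounded queue. This is a toy model and not how a real driver works: `simulate_queue` is a hypothetical helper, one frame is consumed per display refresh, and a refused submission is simply counted as a CPU wait.

```python
from collections import deque


def simulate_queue(bound, produced_per_display, displays):
    """Return (cpu_waits, max_depth) for a frame queue capped at `bound` entries."""
    queue = deque()
    cpu_waits = 0
    max_depth = 0
    next_frame = 0
    for _ in range(displays):
        for _ in range(produced_per_display):
            if len(queue) >= bound:
                cpu_waits += 1       # bound met: the CPU is told to wait
            else:
                queue.append(next_frame)
                next_frame += 1
            max_depth = max(max_depth, len(queue))
        if queue:
            queue.popleft()          # the display consumes one frame per refresh
    return cpu_waits, max_depth
```

With a bound of 3 and a CPU producing two frames per refresh, the queue fills up after two refreshes and the CPU is then blocked once per refresh. With a bound of 1, it is blocked from the very first refresh.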

The real question now becomes: what do we wait for? The answer depends on the context, and we need to go deeper into how a GPU and its driver work. In the next drawing, we can see three main components: the program, the driver context, and the GPU.

Among all the commands that the GPU can receive, there is one in particular that tells the GPU to flip its next back buffer to the front. In OpenGL this can be done using SwapBuffers, and in DirectX this can be done using one of the Present functions. The implementation of each differs and the behavior of the driver may vary.

Now, the system becomes a concurrent system with queues and a set of locks and constraints to guarantee. Here are some of the constraints that can apply depending on your configuration:

- There must be less than `N` flips in the queue

- There must be less than `N` unrendered frames in the queue

- The flip can be performed only on Vsync (or not)

- There are only `N` frame buffers available

- The flips can't be dismissed (or not)

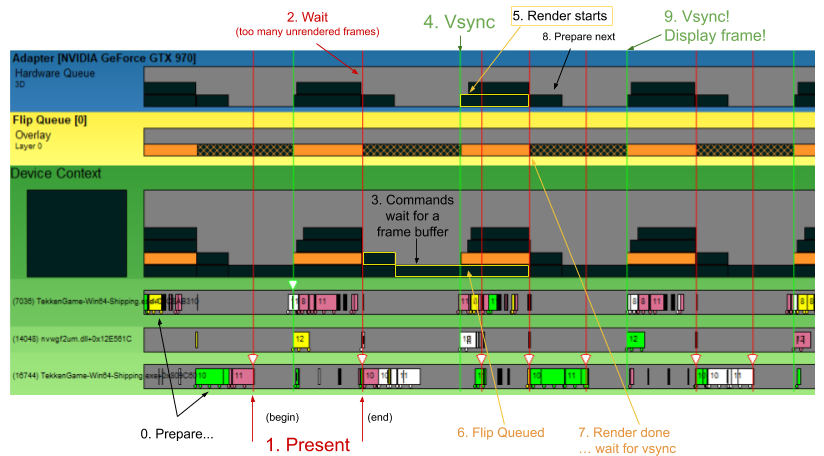

In order to illustrate the impact of each component, here is an example from a debug tool (gpuview) on Tekken 7 using the following settings:

- The flip must happen during vsync (aka `vsync on`).

- There must be less than `1` unrendered frame in the queue (aka `low latency on` or `max pre-rendered frames: 1`).

- Only `1` back buffer is available.

Now you should be able to see exactly how the queues block each other. Spend some time on this and use the previous drawing to help you follow each step.

A lot of this is counter-intuitive from a programmer's point of view, mainly because when the `Present` call ends, the frame is far from being displayed.

The worst that can happen in this scenario is a set of clogged queues with frames waiting to be displayed. Please note that we present here ways to get the lowest latency. However, if it is frame rate stability that you want, you may be interested in having 2 back buffers or a larger flip queue. For instance, triple buffering (1 displayed, 2 queued) means that you can compute the frame earlier, to be prepared for a frame stutter. But if the frame is computed earlier, the time between the input read and the display is structurally larger.

Knobs to turn

This section is written using WydD's knowledge on graphics APIs, if you spot a mistake or if you have knowledge on popular game engines like Unity or Unreal, feel free to reach out.

If you are using lower-level APIs like Vulkan, DirectX 12 (Windows), Gnm (PlayStation) or Metal (iOS, macOS), then you can (or have to) program this kind of queue management yourself and tune each condition as you want. But that will require more knowledge on your part.

OpenGL

Conversely, OpenGL does not have a lot of knobs to turn other than the vsync behavior. For instance, even simple things like triple buffering (meaning: having two back buffers) are tuned at the driver level, and OpenGL has no control over them.

Direct3D 9

If you use a non-Ex version of Direct3D, there is not a lot that you can configure yourself, and GPU sync may be done using high-precision timers only. You can still tune the number of back buffers during CreateDevice.

If you are using the 9Ex version, even if you are using the Blt mode, you have access to some tuning:

- Same as the non-Ex version, you can control the number of back buffers during CreateDevice.

- Device SetMaximumFrameLatency: controls the maximum number of `Present` orders that are available in the device context. The default is 3 frames.

- If you are using the backported Flip model, use PresentEx flags, which can allow you to dismiss some flips if necessary (do read the section about the detection of glitches).

In Blt mode, the Present calls are synced using fences and tokens, leaving the Flip Queue with never more than one entry. The consequence is that a modern driver will never throttle your application, because it usually monitors the Flip Queue and not the device context. SetMaximumFrameLatency is there to properly adjust the desired behavior.

DXGI (DX10+)

The DXGI model uses swap chains to implement the flip model.

Use this documentation to understand how to properly time and detect issues in your synchronization. Other than what is referenced in the aforementioned page, there are still the previous options, now attached to swap chains:

- The number of back buffers is handled in the Swap Chain creation.

- SwapChain SetMaximumFrameLatency: Controls the maximum amount of entries in the Flip Queue. The default is 3 frames

Vsync Tuning

As we have seen previously, there are two major configurations: `vsync on` and `vsync off`. If you look at the previous charts, it should now be clear that the latter option will have lower lag. It can be controlled in every framework, either during the creation of the context or during the swap.

This behavior can have different tunings on modern hardware using technologies like Fast Sync (NVidia) and Enhanced Sync (AMD).

The raw idea behind this is the following:

- Never lock the CPU and let the frame render orders come

- When `vsync` arrives, pick the most recent for the next render and discard all the others

Assuming that the engine comfortably supports rendering at a higher speed than the screen refresh rate, this sync setting can improve the latency but may deteriorate the stability. Unfortunately, it is impossible to control from the game's perspective.
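The raw idea can be modelled in a few lines. This is only a sketch of the selection rule described above, not of the actual Fast Sync or Enhanced Sync implementations: `fast_sync_pick` is a hypothetical helper and frames are plain numbers.

```python
def fast_sync_pick(rendered_between_vsyncs):
    """For each vsync, show the newest finished frame and discard the older ones.

    `rendered_between_vsyncs[i]` lists, in order, the frames completed
    since the previous vsync. Returns (shown, discarded).
    """
    shown = []
    discarded = []
    current = None
    for frames in rendered_between_vsyncs:
        if frames:
            current = frames[-1]           # the most recent frame wins
            discarded.extend(frames[:-1])  # older frames are never displayed
        shown.append(current)              # nothing new: repeat the last frame
    return shown, discarded
```

For example, `fast_sync_pick([[0, 1, 2], [3, 4], [], [5]])` displays frames 2, 4, 4 and 5 and throws away frames 0, 1 and 3. The displayed frame is always the freshest one, but the gap between displayed frames is irregular, which is the stability cost.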

Similarly, you should now be able to understand how having `vsync off` will drastically improve your input lag if the rendering is faster than the vertical sync. But now you are not only sacrificing fluidity, you are also sacrificing display quality by allowing tearing. Variable refresh rate (FreeSync, G-Sync) can compensate for this to an extent.

Conclusion & Recommendations

This page detailed what you need to know when you are developing a game engine, or even when you are using one as is:

- Be careful with your clock management and script execution order. If you are not aware of those, you are probably doing something wrong.

- Understand how the GPU works and properly configure the frame buffer queue. Again, if you just trust the default settings, chances are you are not doing this right.

- If you are using a complex environment (with parallelization): make sure your locks and fences are in sync.

- Test everything in `vsync on` at 60 fps. Especially if you are early in the development, the render times and the game updates will be very fast. So by using `vsync off`, the game loop will execute several times per vertical sync, hiding execution order issues and synchronization problems.

- Use tools like gpuview extensively. Don't work blind: you need to see whether clogging happens or not. Don't trust drivers and documentation, experiment yourself.

- If your gameplay allows it, enable higher FPS. By correctly managing your clocks, you can have a higher frequency game loop. This allows technologies like `VRR` and alternative sync strategies to kick in.

- Your main goal is 2.5 frames of input lag. To go under this limit you need low-level GPU synchronization.